System Design for Millions of Users (Part 1)

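Designing a system that supports millions of users is no small challenge, and it requires continuous refinement. In this article, we build a system that supports a single user and gradually scale it up to serve millions of users.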

March 28, 2022

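1. Single-server setup

To start, everything runs on a single server. The following figure shows a single-server setup in which the web application, the database, the cache, and everything else run on one machine.

To understand this setup, we examine the request flow and the traffic sources.

First, let us look at the request flow.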

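1. Users access the website through a domain name such as api.mysite.com. The domain name is resolved by DNS. The Domain Name System (DNS) is typically a paid third-party service and is not hosted on our own server.

2. An Internet Protocol (IP) address is returned to the browser or mobile application. In this example, the returned IP address is 15.125.23.214.

3. Once the IP address is obtained, Hypertext Transfer Protocol (HTTP) [1] requests are sent directly to the web server.

4. The web server returns an HTML page or a JSON response for rendering.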
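The four steps of the request flow can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the handler `JsonHandler`, the helper `fetch`, and the path `/users/12` are hypothetical, and the "web server" is a stand-in running on the local machine, so the name being resolved is `localhost` rather than a real domain.

```python
import http.client
import json
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class JsonHandler(BaseHTTPRequestHandler):
    """Stand-in web server (hypothetical): answers every GET with JSON."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "status": "ok"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch(hostname, port, path):
    # Steps 1-2: resolve the domain name to an IP address.
    ip_address = socket.gethostbyname(hostname)
    # Step 3: send the HTTP request directly to that IP address.
    conn = http.client.HTTPConnection(ip_address, port, timeout=5)
    try:
        conn.request("GET", path, headers={"Host": hostname})
        response = conn.getresponse()
        # Step 4: the web server answers, here with a JSON body.
        return ip_address, response.status, json.loads(response.read())
    finally:
        conn.close()


# Port 0 asks the operating system for any free port.
server = HTTPServer(("127.0.0.1", 0), JsonHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
ip, status, payload = fetch("localhost", server.server_port, "/users/12")
server.shutdown()
server.server_close()
print(ip, status, payload)
```

In a real deployment the name lookup goes to an external DNS service and the server is a separate machine, but the order of operations is the same.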

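Next, let us look at the traffic sources. Traffic to the web server comes from two sources: the web application and the mobile application.

・Web application: it uses a combination of server-side languages (Java, Python, etc.) to handle business logic and storage, and client-side languages (HTML, JavaScript) for presentation.

・Mobile application: HTTP is the communication protocol between the mobile application and the web server. JSON is commonly used as the API response format because it is simple to transfer and parse.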
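To make the JSON response format concrete, here is a minimal sketch of what the server serializes and what the mobile client decodes. The user record and its field names are hypothetical and not taken from any real API.

```python
import json

# Hypothetical user record that an API might return for one user.
user = {
    "id": 12,
    "firstName": "John",
    "lastName": "Smith",
    "phoneNumbers": ["212 555-1234", "646 555-4567"],
}

# Server side: serialize the record into the HTTP response body.
response_body = json.dumps(user)

# Client side: the mobile app parses the body back into native data.
decoded = json.loads(response_body)
print(decoded["firstName"], decoded["phoneNumbers"][0])
```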

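2. Database

As the number of users grows, one server is no longer enough. We need at least two: one for web and mobile traffic and another for the database. Separating the web server (web tier) from the database server (data tier) allows each to be scaled independently.

Choosing a database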

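You can choose between a relational (SQL) database and a non-relational (NoSQL) database. A relational database is also called a relational database management system (RDBMS). Popular examples include MySQL, Oracle, and PostgreSQL. A relational database represents and stores data in tables and rows, and SQL lets you perform join operations across different tables.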
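A join across two tables can be illustrated with Python's built-in `sqlite3` module. This is a minimal sketch: the `users` and `orders` tables and their contents are made up for the example, and SQLite stands in for whichever relational database you choose.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        user_id INTEGER NOT NULL REFERENCES users(id),
        item TEXT NOT NULL
    );
    INSERT INTO users VALUES (1, 'alice'), (2, 'bob');
    INSERT INTO orders VALUES (10, 1, 'book'), (11, 1, 'pen'), (12, 2, 'lamp');
    """
)

# Join the two tables on the user id to list each user's orders.
rows = conn.execute(
    """
    SELECT users.name, orders.item
    FROM users
    JOIN orders ON orders.user_id = users.id
    ORDER BY orders.id
    """
).fetchall()
conn.close()
print(rows)
```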

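Popular non-relational (NoSQL) databases include MongoDB, Cassandra, Neo4j, and Redis.

Non-relational databases fall into four categories: key-value stores, document stores, column stores, and graph stores.

Join operations are generally not supported in NoSQL databases.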

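For most developers, a relational database is the better choice because it has more than 40 years of development behind it, extensive documentation, and a strong community. In special cases, however, NoSQL may be the better fit for the following reasons:

・Your application requires very low latency.

・Your data is unstructured, or it has no relational structure.

・You only need to serialize and deserialize data (JSON, XML, and so on).

・You need to store a massive amount of data.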

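3. Scaling up and scaling out

Scaling up (vertical scaling) means adding more hardware (CPU, RAM, and so on) to a server. Scaling out (horizontal scaling) means adding more servers to the pool of resources.

When traffic is low, scaling up is a good option because of its simplicity. Unfortunately, it has serious limitations:

・Scaling up has a hard limit, because you cannot add unlimited CPU and memory to a single server.

・Scaling up provides no failover or redundancy. If the server goes down, the website and the application go down with it.

Because of these limitations, scaling out is better suited to large applications than scaling up.

In the previous design, users connect directly to the web server. If the web server goes offline, users can no longer access the website. In addition, if many users access the web server at the same time and it becomes overloaded, users experience slower responses or fail to connect at all. A load balancer is the best technique for addressing these problems.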

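4. Load balancer

A load balancer evenly distributes incoming traffic among the web servers defined in its load-balanced set.

As described above, users connect directly to the public IP address of the load balancer. In this setup, clients can no longer reach the web servers directly. For better security, private IPs are used for communication between servers. A private IP is an IP address that is reachable only between servers in the same network and is unreachable from the public internet. The load balancer communicates with the web servers through private IPs.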

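With two web servers behind the load balancer, the failover problem is solved and the availability of the web tier improves. In detail:

・If server 1 goes offline, all traffic is routed to server 2. This prevents the website from going down. A new healthy web server is then added to the pool to balance the load.

・If web traffic grows rapidly and two servers are not enough to handle it, the load balancer handles the situation gracefully. You only need to add more servers to the web server pool, and the load balancer automatically starts sending requests to them.

The web tier now looks good, but the data tier does not. The current design has only one database, so it supports neither failover nor redundancy. Database replication is a common technique for addressing this problem.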
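The load-balancing behaviour described in this section (even distribution, skipping an offline server, and picking up newly added servers) can be sketched as a simple round-robin selector. This is a toy model under stated assumptions: the class `RoundRobinBalancer`, its methods, and the private IP addresses are hypothetical, and real load balancers use health checks and more elaborate algorithms.

```python
class RoundRobinBalancer:
    """Toy round-robin load balancer over a pool of web servers."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._next = 0

    def add_server(self, server):
        # A newly added server starts receiving traffic automatically.
        if server not in self._servers:
            self._servers.append(server)
        self._healthy.add(server)

    def mark_down(self, server):
        # In practice a health check would call this.
        self._healthy.discard(server)

    def pick(self):
        # Try each server at most once, skipping the unhealthy ones.
        for _ in range(len(self._servers)):
            server = self._servers[self._next % len(self._servers)]
            self._next += 1
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy web servers available")


balancer = RoundRobinBalancer(["10.0.0.1", "10.0.0.2"])  # private IPs
normal = [balancer.pick() for _ in range(4)]

balancer.mark_down("10.0.0.1")  # server 1 goes offline
after_failure = [balancer.pick() for _ in range(2)]

balancer.add_server("10.0.0.3")  # add a healthy server to the pool
after_scaling = [balancer.pick() for _ in range(4)]
print(normal, after_failure, after_scaling)
```

Clients only ever see the load balancer's public IP, so servers can be added or removed without any change on the client side.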

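5. Database replication

Database replication is available in many database management systems. It usually takes the form of a master/slave relationship between the original database (master) and its copies (slaves, also called replicas).

The master database generally supports only write operations. A slave database receives copies of the data from the master and supports only read operations. All data-modifying commands such as INSERT, DELETE, and UPDATE must be sent to the master database. Because most applications need far more reads than writes, a system usually has more slave databases than master databases.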

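Advantages of database replication:

・Better performance

In the master-slave model, all writes and updates happen on the master, while read operations are distributed across the slaves. This improves performance because more queries can be processed in parallel.

・Reliability

If one of the databases is destroyed by a natural disaster such as a hurricane or an earthquake, the data can still be recovered. Because the data is replicated to multiple locations, you do not need to worry about data loss.

・High availability

Because the data is replicated to multiple locations, it can still be accessed from another database even if one database goes offline.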

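The following figure shows the design with both the load balancer and database replication in place.

From the figure above, we can see the following:

・The user gets the IP address of the load balancer from DNS.

・The user connects to the load balancer using this IP address.

・The HTTP request is routed to either server 1 or server 2.

・The web server reads user data from a slave database.

・The web server routes data-modifying operations to the master database. These include insert, update, and delete operations.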
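The read/write split described above can be sketched as a small router that sends data-modifying statements to the master and spreads reads across the slaves. This is a simplified illustration: the class `ReplicatedDatabaseRouter` and the database names are hypothetical, and the statement is classified only by its first keyword, which a production system would not rely on.

```python
import itertools

# Statements that modify data must go to the master.
WRITE_VERBS = {"INSERT", "UPDATE", "DELETE"}


class ReplicatedDatabaseRouter:
    """Chooses a database for each SQL statement (illustrative only)."""

    def __init__(self, master, slaves):
        self.master = master
        self._slaves = itertools.cycle(slaves) if slaves else None

    def route(self, sql):
        words = sql.split()
        if not words:
            raise ValueError("empty SQL statement")
        verb = words[0].upper()
        # Writes, or any statement when no slave exists, go to the master.
        if verb in WRITE_VERBS or self._slaves is None:
            return self.master
        # Reads are distributed across the slaves in turn.
        return next(self._slaves)


router = ReplicatedDatabaseRouter("master-db", ["slave-db-1", "slave-db-2"])
first_read = router.route("SELECT name FROM users WHERE id = 12")
second_read = router.route("select name from users where id = 13")
write = router.route("UPDATE users SET name = 'alice' WHERE id = 12")
print(first_read, second_read, write)
```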

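6. Cache

A cache is a temporary storage area that keeps the results of frequently accessed responses in memory so that subsequent requests are served more quickly. As shown in Figure 1-6, every time a web page is reloaded, one or more database calls are made to fetch data. Application performance can suffer greatly from these repeated calls. A cache mitigates this problem.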

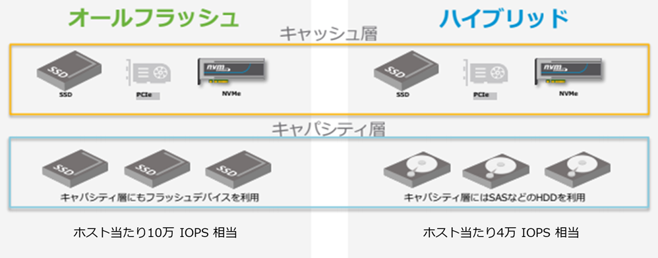

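7. Cache tier

The cache tier is a temporary data store layer that is much faster than the database. The benefits of a separate cache tier include better system performance, a reduced database load, and the ability to scale the cache tier independently. The following figure shows a possible cache server setup.

After receiving a request, the web server first checks whether the cache holds the response. If it does, the data is sent back to the client. If not, the web server queries the database, stores the response in the cache, and sends it back to the client. This caching strategy is called a read-through cache.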
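The lookup order described above can be sketched with an in-memory dictionary standing in for the cache server. The class `ReadThroughCache`, the loader function, and the sample data are hypothetical and chosen only to show the hit and miss paths.

```python
class ReadThroughCache:
    """Serves from the cache when possible, else loads and stores."""

    def __init__(self, load_from_database):
        self._load = load_from_database
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1  # cache hit: no database call
            return self._store[key]
        self.misses += 1  # cache miss: query the database
        value = self._load(key)
        self._store[key] = value  # keep it for later requests
        return value


# A dictionary plays the role of the database in this sketch.
database = {"user:12": {"name": "John"}}
database_calls = []


def load_from_database(key):
    database_calls.append(key)
    return database[key]


cache = ReadThroughCache(load_from_database)
first = cache.get("user:12")   # miss: goes to the database
second = cache.get("user:12")  # hit: served from memory
print(first, second, cache.hits, cache.misses, database_calls)
```

Only the first request reaches the database, which is the load reduction the cache tier is meant to provide.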

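Considerations for using a cache

・Decide when to use a cache: consider using a cache when data is read frequently but modified infrequently. Because cached data is kept in volatile memory, a cache server is not suitable for persisting data. For example, if a cache server restarts, all the data in memory is lost, so important data should be saved in a persistent data store.

・Expiration policy: once cached data expires, it is removed from the cache. Without an expiration policy, cached data stays in memory permanently.

・Consistency: this involves keeping the cached data and the stored data in sync. Inconsistency can occur when the data-modifying operations on the database and on the cache are not in a single transaction. When scaling across multiple regions, maintaining consistency between the database and the cache is challenging.

・Mitigating failures: a single cache server can become a single point of failure (SPOF). As Wikipedia defines it, "A single point of failure (SPOF) is a part of a system that, if it fails, will stop the entire system from working." Running multiple cache servers across different data centers therefore avoids a SPOF. Another approach is to overprovision the required memory by a certain percentage, which provides a buffer as memory usage grows.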
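The expiration policy from the list above can be sketched as a cache whose entries carry a time-to-live (TTL). This is a minimal sketch: the class `ExpiringCache` and the 60-second TTL are assumptions for illustration, and the clock is injectable so that the behaviour can be shown without waiting.

```python
import time


class ExpiringCache:
    """In-memory cache that drops entries once their TTL has passed."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expiry time)

    def set(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: remove from the cache
            return default
        return value


# A fake clock lets the example move time forward by hand.
now = [0.0]
cache_with_ttl = ExpiringCache(ttl_seconds=60, clock=lambda: now[0])
cache_with_ttl.set("user:12", "John")

fresh = cache_with_ttl.get("user:12")    # still within the TTL
now[0] = 60.0
expired = cache_with_ttl.get("user:12")  # TTL reached: entry is gone
print(fresh, expired)
```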

