Solutions for building video conferencing applications: video/audio calls

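Before digging into the technology: have you ever used a voice/video calling tool such as Google Hangouts? Have you wondered how such sites manage to deliver smooth video calls with many people taking part at the same time? These applications are built around a technology called WebRTC.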

July 8, 2020

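What is WebRTC?

WebRTC (Web Real-Time Communication) is a technology that lets web applications exchange data between two devices over a peer-to-peer (P2P) protocol, so that they can make audio and video calls and share files or screens without third-party support. No additional plugins need to be installed.

WebRTC is still under development. Most browsers support it, but that support is not really consistent. One way around this is to use an adapter (a shim that smooths over browser differences), as described in the references at the end of the article.

Because the technology is built into the browser, two browsers at two endpoints can talk to each other without passing data through an intermediate server, so signals travel faster and with lower latency, and the application runs more efficiently. It is now very popular for building online meeting products.

Before looking at how browsers expose the WebRTC API, we need to understand how two machines communicate directly with each other, which is the core of the technology.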

Private/Public IP

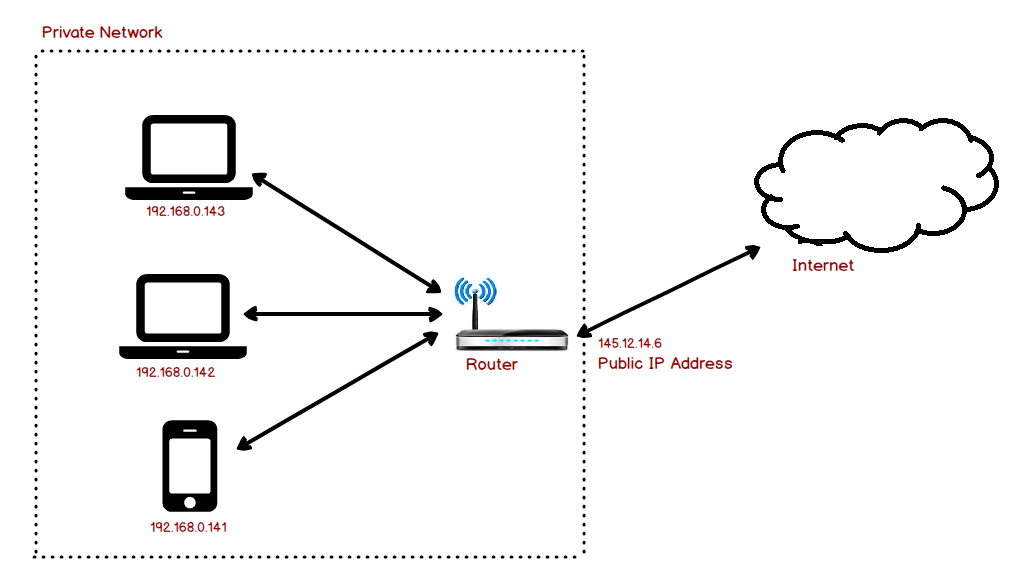

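The concepts that follow are closely tied to two notions: private IP and public IP.

The theory behind private and public IPs is fairly long and easy to look up: why the concept exists, what problems it solves, and so on.

In short, though: a private IP is an address used inside a local area network, such as a company network. Two local networks may use the same configuration and still be "invisible" to each other. A public IP, by contrast, identifies a single host on the Internet and is unique.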
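To make the distinction concrete, here is a minimal TypeScript sketch that checks whether an IPv4 address falls inside one of the private ranges reserved by RFC 1918 (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16). The function names are illustrative only and are not part of any WebRTC API.

```typescript
type IPv4Octets = [number, number, number, number];

// Parse a dotted-quad string into four octets, or return null if it is malformed.
function parseIPv4(address: string): IPv4Octets | null {
  const parts = address.split(".");
  if (parts.length !== 4) return null;
  const octets = parts.map((part) => (/^\d{1,3}$/.test(part) ? Number(part) : NaN));
  if (!octets.every((octet) => octet >= 0 && octet <= 255)) return null;
  return octets as IPv4Octets;
}

// True when the address belongs to an RFC 1918 private range.
function isPrivateIPv4(address: string): boolean {
  const octets = parseIPv4(address);
  if (octets === null) return false;
  const [first, second] = octets;
  return (
    first === 10 ||
    (first === 172 && second >= 16 && second <= 31) ||
    (first === 192 && second === 168)
  );
}
```

An address such as 192.168.1.10 can be in use on two different company networks at the same time, which is why it cannot identify a host on the Internet.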

ICE (Interactive Connectivity Establishment)

Normally, two computers on two different private networks cannot communicate with each other, for a variety of reasons. ICE is a technique for solving this problem. This article uses STUN/TURN servers to do so.

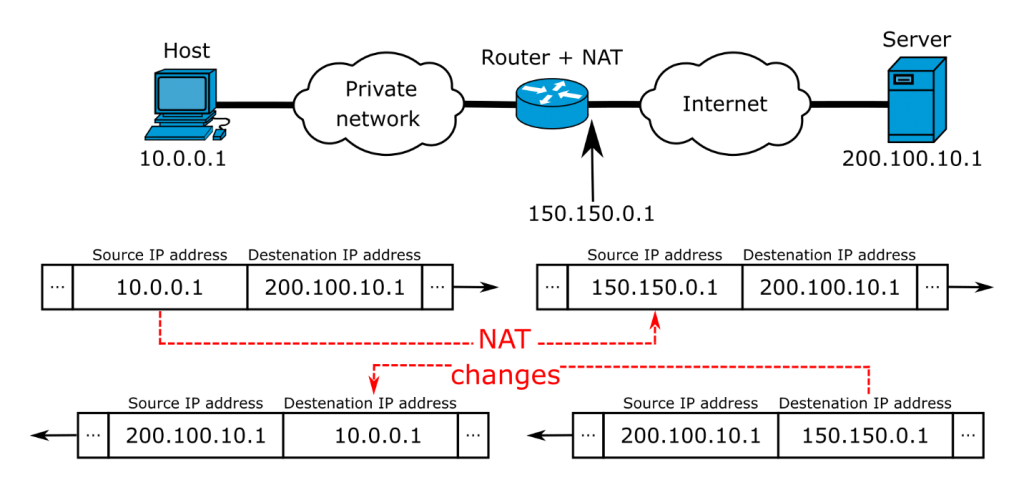

NAT (Network Address Translation)

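NAT is a method of translating the IP information in packets between a local (private) network and the public network (the Internet). It lets computers on a local network communicate with other machines in the public environment. The translation of packet information from the local network to the public network, and back again, takes place on the router that sits between the two networks.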
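The translation can be pictured as a lookup table kept by the router. The sketch below is a simplified in-memory model, not how any particular router is implemented; the class name, the starting port 40000, and the addresses used with it are assumptions for illustration.

```typescript
interface Endpoint {
  ip: string;
  port: number;
}

// Toy NAT: maps each private (ip, port) pair to a port on one shared public IP.
class SimpleNat {
  private readonly publicIp: string;
  private nextPort: number;
  private readonly outbound = new Map<string, number>(); // "ip:port" -> public port
  private readonly inbound = new Map<number, Endpoint>(); // public port -> private endpoint

  constructor(publicIp: string, firstPort = 40000) {
    this.publicIp = publicIp;
    this.nextPort = firstPort;
  }

  // Rewrite the source of an outgoing packet, reusing an existing mapping if present.
  translateOutbound(source: Endpoint): Endpoint {
    const key = `${source.ip}:${source.port}`;
    let publicPort = this.outbound.get(key);
    if (publicPort === undefined) {
      publicPort = this.nextPort++;
      this.outbound.set(key, publicPort);
      this.inbound.set(publicPort, source);
    }
    return { ip: this.publicIp, port: publicPort };
  }

  // Find the private endpoint a reply should be delivered to, if a mapping exists.
  translateInbound(publicPort: number): Endpoint | undefined {
    return this.inbound.get(publicPort);
  }
}
```

A remote machine only ever sees the public IP and the mapped port; a packet arriving on a port with no mapping has nowhere to go, which is one reason unsolicited inbound connections fail.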

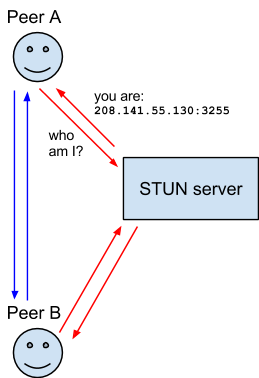

STUN (Session Traversal Utilities for NAT)

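STUN is an auxiliary protocol that supports other protocols when they have to work through NAT. It lets a machine determine the type of NAT, its public IP address, and the port that the NAT has assigned to the connection from the machine on the local network. The limitation of STUN is that it does not work with symmetric NAT. To use STUN, peers need an intermediate STUN server.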
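Browsers handle STUN internally, so application code never builds these packets. Purely to show what is exchanged, the sketch below encodes the 20-byte header of a STUN Binding request and decodes an IPv4 XOR-MAPPED-ADDRESS value as laid out in RFC 5389. The function names are illustrative, and no network I/O is performed.

```typescript
const STUN_BINDING_REQUEST = 0x0001;
const STUN_MAGIC_COOKIE = 0x2112a442;

// Build a Binding request with no attributes: type, length, magic cookie, transaction ID.
function buildBindingRequest(transactionId: Uint8Array): Uint8Array {
  if (transactionId.length !== 12) throw new Error("transaction ID must be 12 bytes");
  const packet = new Uint8Array(20);
  const view = new DataView(packet.buffer);
  view.setUint16(0, STUN_BINDING_REQUEST); // DataView writes big-endian by default
  view.setUint16(2, 0); // message length: zero bytes of attributes
  view.setUint32(4, STUN_MAGIC_COOKIE);
  packet.set(transactionId, 8);
  return packet;
}

// Decode the 8-byte value of an IPv4 XOR-MAPPED-ADDRESS attribute.
// The port is XORed with the top 16 bits of the cookie, the address with the whole cookie.
function decodeXorMappedAddressV4(value: Uint8Array): { ip: string; port: number } {
  if (value.length !== 8 || value[1] !== 0x01) {
    throw new Error("expected an 8-byte IPv4 attribute value");
  }
  const view = new DataView(value.buffer, value.byteOffset, value.byteLength);
  const port = view.getUint16(2) ^ (STUN_MAGIC_COOKIE >>> 16);
  const address = (view.getUint32(4) ^ STUN_MAGIC_COOKIE) >>> 0;
  const ip = [24, 16, 8, 0].map((shift) => (address >>> shift) & 0xff).join(".");
  return { ip, port };
}
```

The decoded address is the public IP and port that the STUN server saw, which is what the peer then advertises to the other side.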

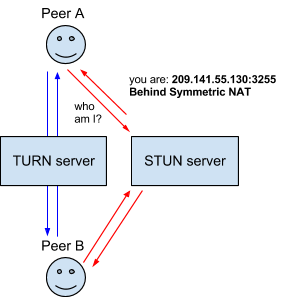

TURN (Traversal Using Relays around NAT)

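Like STUN, TURN helps peers get through NAT, but instead of connecting the two peers directly it overcomes STUN's weakness with symmetric NAT: every connection and all data exchange go through the TURN server. The downside is that all traffic has to be relayed through the server, so for a video call with dozens of people, for example, a TURN server does not perform well in practice.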
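In a browser, STUN and TURN servers are handed to the connection as a list of ICE servers. The sketch below builds an object in the shape of the browser's RTCConfiguration (the `iceServers` and `iceTransportPolicy` fields); the local interfaces mirror that shape so the code also compiles outside a browser. The host names, credentials, and the helper name are placeholders, not real servers.

```typescript
// Local mirrors of the browser's RTCIceServer / RTCConfiguration shapes.
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

interface PeerConfiguration {
  iceServers: IceServer[];
  iceTransportPolicy: "all" | "relay";
}

interface TurnAccount {
  host: string;
  username: string;
  credential: string;
}

// Build an ICE configuration: STUN always, TURN when an account is supplied.
// relayOnly forces traffic through TURN and only takes effect if a TURN account is given.
function buildIceConfiguration(
  stunHost: string,
  turn?: TurnAccount,
  relayOnly = false
): PeerConfiguration {
  const iceServers: IceServer[] = [{ urls: `stun:${stunHost}:3478` }];
  if (turn) {
    iceServers.push({
      urls: `turn:${turn.host}:3478`,
      username: turn.username,
      credential: turn.credential,
    });
  }
  return { iceServers, iceTransportPolicy: relayOnly && turn ? "relay" : "all" };
}
```

In a browser, the result would typically be passed to `new RTCPeerConnection(configuration)`. With the "all" policy, ICE tries direct candidates first and falls back to the relay only when needed, which keeps load off the TURN server.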

SDP (Session Description Protocol)

SDP is a standard for describing the content exchanged when a connection is set up, such as resolution, encoding, and format.
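An SDP description is plain text, one `key=value` pair per line. As a rough illustration, the sketch below extracts the media sections (`m=` lines) and the codecs announced for each (`a=rtpmap:` lines). It handles only those two line types, and the function and type names are made up for this example.

```typescript
interface MediaSection {
  kind: string; // "audio", "video", ...
  port: number;
  protocol: string;
  codecs: string[]; // e.g. "opus/48000/2"
}

// Collect each m= section together with the codecs from its a=rtpmap lines.
function parseMediaSections(sdp: string): MediaSection[] {
  const sections: MediaSection[] = [];
  let current: MediaSection | undefined;
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith("m=")) {
      // m=<media> <port> <proto> <fmt> ...
      const [kind = "", port = "0", protocol = ""] = line.slice(2).split(" ");
      current = { kind, port: Number(port), protocol, codecs: [] };
      sections.push(current);
    } else if (line.startsWith("a=rtpmap:") && current) {
      // a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
      const codec = line.slice("a=rtpmap:".length).split(" ")[1];
      if (codec) current.codecs.push(codec);
    }
  }
  return sections;
}

// A shortened, hand-written description used only for illustration.
const sampleSdp = [
  "v=0",
  "o=- 4611731400430051336 2 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=rtpmap:111 opus/48000/2",
  "m=video 9 UDP/TLS/RTP/SAVPF 96",
  "a=rtpmap:96 VP8/90000",
].join("\r\n");
```

Calling `parseMediaSections(sampleSdp)` yields one audio section and one video section, each listing the codec the sender proposes.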

Main WebRTC concepts/APIs

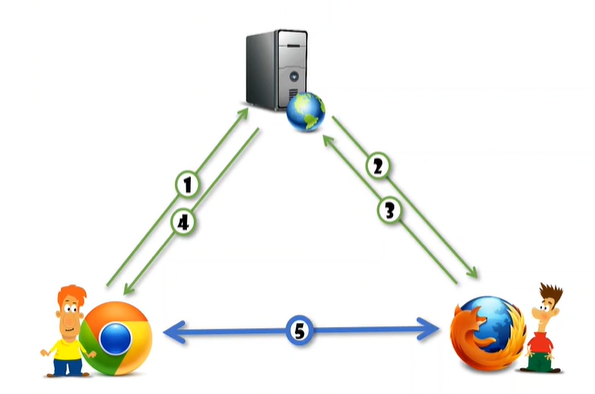

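Signaling server

As noted at the start of the article, WebRTC is a technology that communicates over a P2P protocol. So how do the two machines find each other and connect? Before the peers can talk to each other, an intermediate layer is needed to set up the connection between the two machines; in other words, a server is needed in the middle. This server is usually called the signaling server.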
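WebRTC does not prescribe how signaling works, so any channel that can pass messages between the two peers will do. The sketch below models a signaling server as an in-memory relay, to show that its only job is to deliver messages to the right peer. A real deployment would use something like WebSockets or a hosted database instead; every name here is hypothetical.

```typescript
type SignalType = "offer" | "answer" | "candidate";

interface SignalMessage {
  type: SignalType;
  from: string;
  to: string;
  payload: string; // SDP text or a serialized ICE candidate
}

type SignalHandler = (message: SignalMessage) => void;

// Minimal relay: peers register a handler and the hub forwards messages by recipient ID.
class SignalingHub {
  private readonly handlers = new Map<string, SignalHandler>();

  join(userId: string, handler: SignalHandler): void {
    this.handlers.set(userId, handler);
  }

  leave(userId: string): void {
    this.handlers.delete(userId);
  }

  // Returns false when the recipient is not connected.
  send(message: SignalMessage): boolean {
    const handler = this.handlers.get(message.to);
    if (!handler) return false;
    handler(message);
    return true;
  }
}
```

Note that the hub never inspects the payload. Media does not pass through it either: once the peers have exchanged their descriptions and candidates, it is out of the picture.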

RTCPeerConnection

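An interface of the WebRTC API provided by the browser that represents a WebRTC connection from the local machine to a remote machine. It provides the methods a web application needs to establish the connection, maintain it, and close it once the conversation is over.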

MediaStream

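An interface of the WebRTC API provided by the browser that represents a stream of media transferred between the two machines once the connection has been established. The audio and video data of the conversation are sent on these streams: the input of the local machine is the output on the remote one.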

RTCDataChannel

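An interface of the WebRTC API provided by the browser. Once the connection has been established, data channels can be attached to it so that the peers can transfer data to each other. In theory, each connection can carry up to 65,534 data channels, although the actual number depends on the browser.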

Basic architecture of a WebRTC P2P application

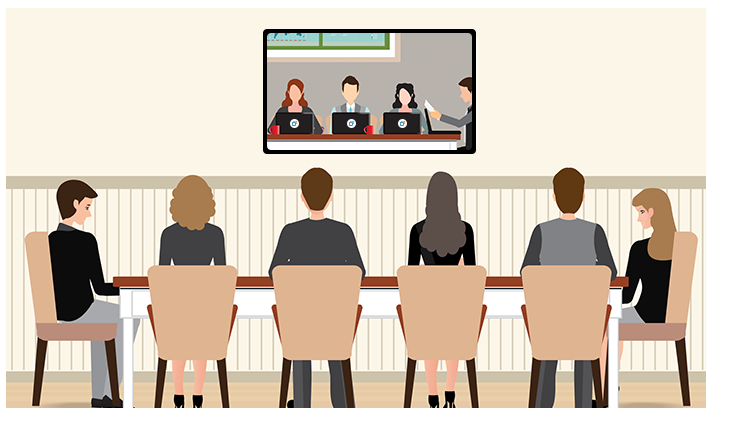

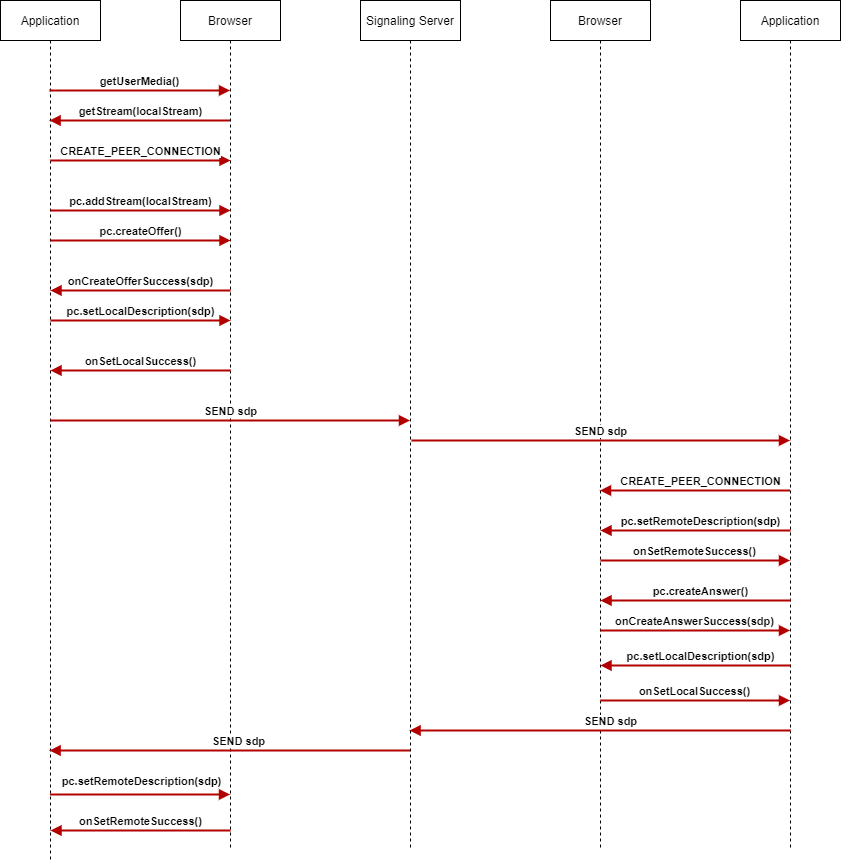

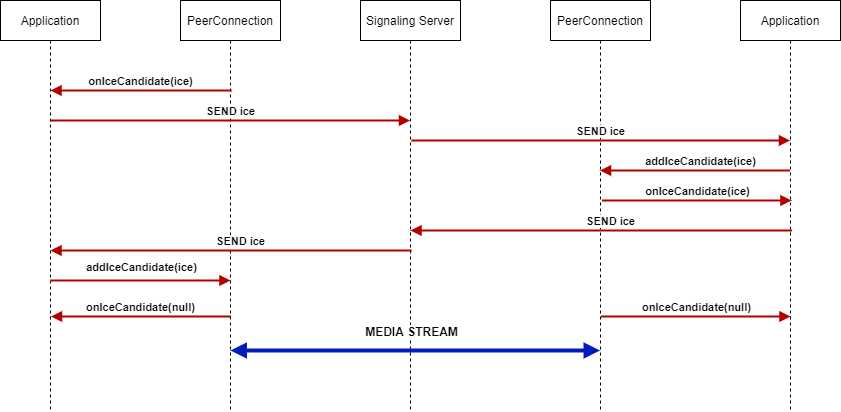

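The architecture of a WebRTC (P2P) application is very simple. It works as follows.

- User A sends a message (the offer SDP) through the signaling server to say that it wants to talk to B.

- The signaling server notifies B that A wants to talk.

- B accepts and replies (the answer SDP).

- The signaling server notifies A that B has accepted.

- A connection is established between A and B. From this point on, everything exchanged between A and B (video, audio, files, and so on) goes over the established connection.

For more detail, refer to the sequence of steps in the model, which shows how the APIs are called to establish a connection between two machines.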
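The order of the calls can be sketched as follows. `PeerLike` is a simplified stand-in whose method names mirror the browser's `RTCPeerConnection` (`createOffer`, `createAnswer`, `setLocalDescription`, `setRemoteDescription`). `RecordingPeer` is a fake that only logs calls so the sequence can run outside a browser, and the `relay` parameter stands in for the trip through the signaling server. ICE candidate exchange is omitted, and all of these names are assumptions for illustration.

```typescript
interface SessionDescription {
  type: "offer" | "answer";
  sdp: string;
}

// The subset of RTCPeerConnection methods used during offer/answer negotiation.
interface PeerLike {
  createOffer(): Promise<SessionDescription>;
  createAnswer(): Promise<SessionDescription>;
  setLocalDescription(description: SessionDescription): Promise<void>;
  setRemoteDescription(description: SessionDescription): Promise<void>;
}

type Relay = (description: SessionDescription) => Promise<SessionDescription>;

// Offer/answer exchange: A offers, B answers, each side stores both descriptions.
async function negotiate(
  caller: PeerLike,
  callee: PeerLike,
  relay: Relay = async (description) => description
): Promise<void> {
  const offer = await caller.createOffer();
  await caller.setLocalDescription(offer);
  await callee.setRemoteDescription(await relay(offer)); // A -> signaling -> B
  const answer = await callee.createAnswer();
  await callee.setLocalDescription(answer);
  await caller.setRemoteDescription(await relay(answer)); // B -> signaling -> A
}

// Fake peer that records which methods were called, for running the flow without a browser.
class RecordingPeer implements PeerLike {
  readonly name: string;
  readonly calls: string[] = [];
  local?: SessionDescription;
  remote?: SessionDescription;

  constructor(name: string) {
    this.name = name;
  }

  async createOffer(): Promise<SessionDescription> {
    this.calls.push("createOffer");
    return { type: "offer", sdp: `offer-from-${this.name}` };
  }

  async createAnswer(): Promise<SessionDescription> {
    this.calls.push("createAnswer");
    return { type: "answer", sdp: `answer-from-${this.name}` };
  }

  async setLocalDescription(description: SessionDescription): Promise<void> {
    this.calls.push("setLocalDescription");
    this.local = description;
  }

  async setRemoteDescription(description: SessionDescription): Promise<void> {
    this.calls.push("setRemoteDescription");
    this.remote = description;
  }
}
```

Running `negotiate(new RecordingPeer("A"), new RecordingPeer("B"))` leaves each fake peer holding its own description as local and the other side's as remote, which is the state two real connections need before media can flow.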

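Sample application

There are now many complete open-source WebRTC applications. Before starting with one of those large codebases, however, it is worth trying a small, plain source built directly on the WebRTC API in order to understand the technology better.

The sample source is available at https://github.com/lapth/FirebaseRTC.git. It is a fork of https://github.com/webrtc/FirebaseRTC, which is provided by http://webrtc.org.

For this article, the author chose a sample based on Firebase and Firebase Hosting in order to keep the discussion of the signaling server / back-end (BE) part to a minimum. There is no general convention for the back end of a WebRTC application; it varies from one application to another.

The application is very small and easy to understand. It consists of two parts.

- For the code that manages the WebRTC application, see public/app.js. a) Pay attention to the STUN/TURN configuration. The servers there are used only as examples; in practice you need reliable private servers. b) If you are not familiar with the Firebase commands, you can skim them and think of them as RESTful CRUD statements.

- For the UI, see public/index.html. The rest is Firebase and Hosting configuration, which can be ignored.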

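Solutions for group meetings (multiparty video conferencing)

The previous sections covered the basic concepts and architecture of a WebRTC application in the P2P model. Next, we look at the different architectures available for group calls.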

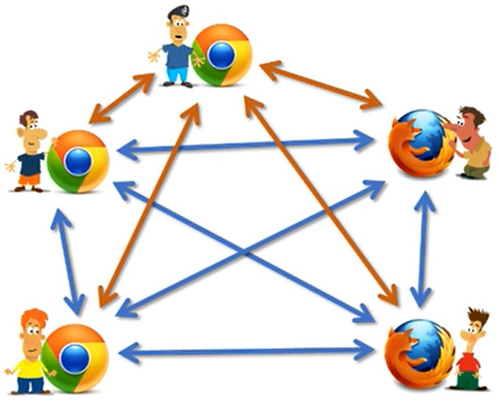

Mesh

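In a mesh architecture, browsers connect directly to one another and transfer data between themselves. Each browser sends its data to every other browser in the group and receives data from each of them.

Advantages: simple and low cost; effective for small groups, typically four to six participants.

Disadvantages: it does not scale to large groups, because the load on each peer's device and its upload/download traffic grow with every additional peer.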
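A little arithmetic shows why mesh stops scaling. With n participants there are n(n-1)/2 connections in total, and each peer uploads its stream n-1 times. The sketch below computes both; the function names are illustrative, and any per-stream bitrate passed in is a figure you choose, not a measurement.

```typescript
// Reject group sizes that do not describe a call (fewer than two people, or not an integer).
function assertGroupSize(participants: number): void {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a call needs at least two participants");
  }
}

// Total number of peer-to-peer connections in a full mesh: n(n-1)/2.
function meshConnectionCount(participants: number): number {
  assertGroupSize(participants);
  return (participants * (participants - 1)) / 2;
}

// Upload bandwidth one peer needs when it sends its stream to every other peer.
function meshUploadKbps(participants: number, streamKbps: number): number {
  assertGroupSize(participants);
  return (participants - 1) * streamKbps;
}
```

For example, `meshConnectionCount(6)` is 15, and at an assumed 1,000 kbps per stream each of the six peers would need 5,000 kbps of upload capacity.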

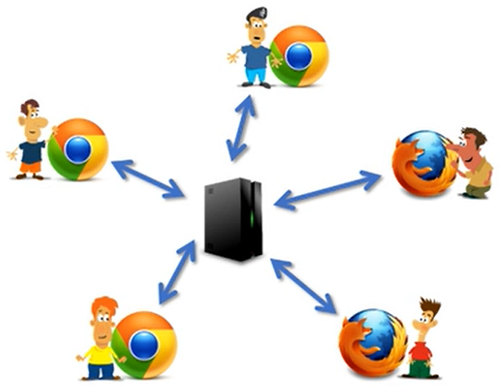

MCU (Multipoint Conferencing Unit)

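Unlike mesh, in the MCU solution each peer sends its data to a central server. The central server is responsible for decoding the streams it receives from the participants, mixing them, re-encoding them into a single stream, and delivering that stream to each party.

Advantages: it solves the drawbacks of mesh and does not demand many resources from each peer.

Disadvantages: it requires a central server powerful enough to decode and re-encode the participants' data with minimal latency.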

SFU (Selective Forwarding Unit)

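Finally, there is the SFU architecture. A central server is still required, but unlike in the MCU architecture its job is simply to forward data as it arrives on a stream. In other words, the central server forwards each stream to the other participants.

This solution is well suited to large group calls.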
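The trade-offs between the three architectures can be summarized by counting streams per participant. The sketch below encodes the simplified model described in this section: one stream per sender, with no simulcast or other optimizations. The type and function names are illustrative only.

```typescript
type Topology = "mesh" | "mcu" | "sfu";

interface StreamLoad {
  uploadsPerPeer: number; // streams each participant sends
  downloadsPerPeer: number; // streams each participant receives
  serverTranscodes: boolean; // whether a central server decodes and re-encodes media
}

// Per-participant stream counts for a call with the given number of participants.
function streamLoad(topology: Topology, participants: number): StreamLoad {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("a call needs at least two participants");
  }
  const others = participants - 1;
  switch (topology) {
    case "mesh": // everyone sends to and receives from everyone else
      return { uploadsPerPeer: others, downloadsPerPeer: others, serverTranscodes: false };
    case "mcu": // one stream up, one mixed stream down
      return { uploadsPerPeer: 1, downloadsPerPeer: 1, serverTranscodes: true };
    case "sfu": // one stream up, every other participant's stream forwarded down
      return { uploadsPerPeer: 1, downloadsPerPeer: others, serverTranscodes: false };
  }
}
```

With ten participants, mesh costs each peer nine uploads, while an SFU costs one upload and nine downloads and spares the server the decoding and re-encoding work an MCU has to do.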
